Optimizing Spot Instance costs with the Ansys LS-DYNA checkpoint feature

This post was written by Dnyanesh Digraskar, Senior Partner Solutions Architect (HPC) and Amit Varde, Senior Partner Development Manager.

Organizations are migrating their high performance computing (HPC) workloads from on-premises infrastructure to Amazon Web Services (AWS) because of benefits such as high availability, flexible capacity, and the latest processors, storage, and networking, all on a pay-as-you-go basis. These benefits help engineering teams reduce costs and get faster results for CPU- and memory-intensive workloads such as Finite Element Analysis (FEA).

When running an FEA workload on AWS, the dominant cost factor is Amazon EC2 instance usage. Amazon EC2 Spot Instances are a cost-effective choice in this situation. Spot Instances are a purchasing option that uses spare Amazon EC2 capacity and lets you run EC2 instances at a discount of up to 90% compared to On-Demand Instance prices.

In this article, we show how to combine Ansys LS-DYNA's checkpoint capabilities and auto-restart utility with Spot Instances to build fault-tolerant FEA workloads while still benefiting from the cost savings of Spot Instances.

How Spot Instances Work

Spot Instances are unused EC2 capacity in the AWS Cloud, offered at a significant discount compared to On-Demand Instance prices. The trade-off is that they can be interrupted: when the EC2 service needs the capacity back, the Spot Instance must return it. EC2 keeps spare capacity ready so that it can serve any request at any time, across more than 375 instance types in 77 Availability Zones and 24 Regions. As a result, some of that capacity sits unused at any given moment, and the idea behind Spot Instances is to offer it rather than leave it idle.

The amount and location (Regions and Availability Zones) of spare EC2 capacity is dynamic and changes in real time. That is why Spot users should run only interruptible workloads on Spot Instances. In addition, Spot Instance workloads should be flexible enough to move in real time to wherever spare capacity exists (or to pause until spare capacity becomes available again). See this white paper for more details on how Spot Instances work and the various usage scenarios.

Overall solution

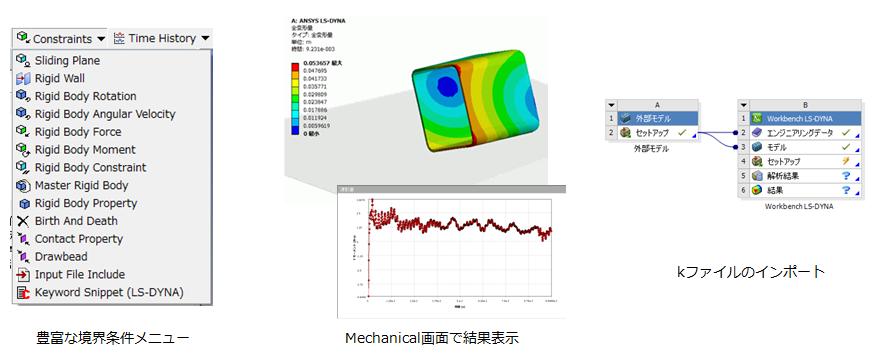

The Ansys LS-DYNA checkpoint utility, known as the lsdyna-spotless toolkit, uses a mechanism for monitoring simulation jobs on Spot Instances, as shown in the following configuration diagram (Figure 1).

Figure 1: Job restart and temporary interrupt monitoring configuration

The flow shown in the above configuration diagram is as follows.

- The user submits one or more Ansys LS-DYNA jobs at the head node of the cluster.

- Each job is divided into multiple Message Passing Interface (MPI) tasks based on user settings.

- A monitor daemon (poll) is sent to each compute node to poll for interruption signals from EC2 metadata for all MPI tasks.

- When it receives the interruption signal, it creates a checkpoint for the running simulation and saves it to a /shared drive accessible by both the head node and the compute node.

- The job restart daemon (job-restarter) resubmits the job to the cluster's queue when the requested capacity becomes available again.
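The polling step above can be sketched as follows. This is a minimal illustration, not the toolkit's actual poll daemon: it assumes the standard EC2 instance metadata endpoint `spot/instance-action`, which answers with HTTP 404 until an interruption is scheduled, and it uses IMDSv2 session tokens. The function names, the `POLL_INTERVAL` variable, and the handler argument are hypothetical.

```shell
#!/bin/bash
# Sketch of a Spot interruption poll loop (not the toolkit's own "poll" daemon).

IMDS="http://169.254.169.254/latest"

# Succeed (exit status 0) when the HTTP status returned by the
# spot/instance-action endpoint means an interruption notice was issued.
# The endpoint answers 404 until EC2 schedules an interruption.
is_interruption_notice() {
  [ "$1" = "200" ]
}

# Query the instance metadata service (IMDSv2) and print the HTTP status code.
fetch_instance_action_status() {
  local token
  token=$(curl -s -m 2 -X PUT "$IMDS/api/token" \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 60")
  curl -s -m 2 -o /dev/null -w '%{http_code}' \
    -H "X-aws-ec2-metadata-token: $token" \
    "$IMDS/meta-data/spot/instance-action"
}

# Poll every POLL_INTERVAL seconds and call the handler once a notice appears.
# The handler is a placeholder for whatever triggers the checkpoint.
poll_for_interruption() {
  local handler="$1" interval="${POLL_INTERVAL:-5}"
  while true; do
    if is_interruption_notice "$(fetch_instance_action_status)"; then
      "$handler"
      return 0
    fi
    sleep "$interval"
  done
}

# Offline demonstration of the decision logic only (no network access needed).
for status in 404 200; do
  if is_interruption_notice "$status"; then
    echo "status $status: interruption notice, checkpoint now"
  else
    echo "status $status: no interruption scheduled"
  fi
done
```

On a compute node, something like `poll_for_interruption my_checkpoint_handler` would block until a notice arrives; keeping the status check in its own function makes the decision logic testable without access to the metadata service.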

You now have an overview of how the utilities and commands work. Next, let's look at how to set up an Ansys LS-DYNA simulation environment with the lsdyna-spotless utility, and then at the resulting instance costs and their implications.

Setting up the simulation environment

To set up the LS-DYNA environment on the head node of the cluster:

- Download the latest Ansys LS-DYNA and install it on the head node. This article uses version R12. Then download and unzip the toolkit from the GitHub repository.

- Set some customization options in env-vars.sh:

  - Set the MPPDYNA variable to the path of the Ansys LS-DYNA executable file.

  - Set the license server and SLURM queue variables.

        $ export LSTC_LICENSE_SERVER="IP-address-license-server"
        $ export SQQUEUE="your-SLURM-queue-name"

- Load env-vars.sh with the source command to set the environment variables.

      $ source env-vars.sh

- Copy all the tools included in the package to the path set in the second step.

      $ cp * /shared/ansys/bin
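Before submitting jobs, it can help to confirm that the variables from the steps above are actually set in the current shell. The following is a small sketch, not part of the toolkit: the helper name `missing_vars` is hypothetical, and the variable list is taken from the setup steps plus `SQDIR`, which the job script in this article references. Whether env-vars.sh itself defines `SQDIR` is an assumption.

```shell
#!/bin/bash
# Sketch: verify the variables the toolkit scripts rely on before submitting jobs.

# Print the name of every listed variable that is unset or empty.
# Returns non-zero if at least one is missing.
missing_vars() {
  local name missing=0
  for name in "$@"; do
    if [ -z "${!name}" ]; then   # ${!name} expands the variable named by $name
      echo "$name"
      missing=1
    fi
  done
  return "$missing"
}

# Variable names taken from the setup steps and the job script in this article.
REQUIRED_VARS="MPPDYNA LSTC_LICENSE_SERVER SQQUEUE SQDIR"

if unset_list=$(missing_vars $REQUIRED_VARS); then
  echo "environment looks complete"
else
  echo "missing variables:" $unset_list
fi
```

Run it in the same shell after `source env-vars.sh`; any name it reports should be fixed in env-vars.sh before jobs are started.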

Job launch

Now that the environment is set up, let's submit a fault-tolerant Ansys LS-DYNA job with the checkpoint utility enabled.

Each job is expected to have a unique SLURM job script and a directory with a unique name. Also, Ansys LS-DYNA requires a file named main.k as the main input data. If the file name is different, change it or create a symbolic link.
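The main.k requirement can be handled with a symbolic link, for example as in the sketch below. The helper `ensure_main_k` and the file name `neon.k` are made up for illustration; only the target name main.k comes from the requirement above.

```shell
#!/bin/bash
# Sketch: make sure a job directory exposes its input deck as main.k.

# ensure_main_k <job_dir> <input_deck_name>
# Creates a symbolic link main.k -> <input_deck_name> inside <job_dir>
# unless main.k already exists. Fails if the input deck is missing.
ensure_main_k() {
  local job_dir="$1" deck="$2"
  if [ -e "$job_dir/main.k" ]; then
    return 0
  fi
  if [ ! -f "$job_dir/$deck" ]; then
    echo "no input deck $deck in $job_dir" >&2
    return 1
  fi
  # Relative link target, so the job directory can be moved as a whole.
  ln -s "$deck" "$job_dir/main.k"
}

# Demonstration in a throwaway directory; "neon.k" is a made-up file name.
demo=$(mktemp -d)
mkdir "$demo/job-1"
echo '*KEYWORD' >"$demo/job-1/neon.k"
ensure_main_k "$demo/job-1" neon.k
ls -l "$demo/job-1/main.k"
rm -rf "$demo"
```

Linking instead of renaming keeps the original input deck name intact, which is convenient when the same model is reused across several job directories.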

To start the job, use a command like the following:

$ start-jobs 2 72 spotq.slurm job-1 job-2 job-3

This command submits three Ansys LS-DYNA MPI jobs. Each job uses 2 nodes with 72 tasks per node, for a total of 144 tasks per job.

The following example is a spotq.slurm job script for the SLURM scheduler.

    #!/bin/bash
    #SBATCH -J job          # Job name
    #SBATCH -o job.%j.out   # Name of stdout output file

    INPUTDECK="main.k"

    # restart from the newest d3dump file if one exists, otherwise start fresh
    if ls d3dump* 1>/dev/null 2>&1; then
      mode="r=$(ls -t d3dump* | head -1 | cut -c1-8)"
      op="restart"
    else
      mode="i=$INPUTDECK"
      op="start"
    fi

    # create/overwrite checkpoint command file
    echo "sw1." >switch

    # launch monitor tasks (one per node, in the background so the solver can start)
    job_file=$(scontrol show job $SLURM_JOB_ID | awk -F= '/Command=/{print $2}')
    srun --overcommit --ntasks=$SLURM_JOB_NUM_NODES --ntasks-per-node=1 $SQDIR/bin/poll "$SLURM_JOB_ID" "$SLURM_SUBMIT_DIR" "$job_file" &>/dev/null &

    # Launch MPI-based executable
    echo -e "$SLURM_SUBMIT_DIR ${op}ed: $(date) | $(date +%s)" >>$SQDIR/var/timings.log
    srun --mpi=pmix_v3 --overcommit $MPPDYNA $mode
    echo -e "$SLURM_SUBMIT_DIR stopped: $(date) | $(date +%s)" >>$SQDIR/var/timings.log

To stop a running job, use the following command:

$ stop-jobs

Impact on execution time and cost

We submitted a total of 10 jobs to two c5.18xlarge Spot Instances and assigned 144 MPI tasks to each job using the lsdyna-spotless toolkit. The monitoring utility tracks the status changes of each job and checks for interruptions. When the start and end times of a job are analyzed, the time it spent suspended is excluded, so the actual execution time can be estimated. You can also see the overhead of Spot Instance interruptions by comparing the total time, including the interruption time, with the execution time. A utility (calc-timing) is provided to aggregate the timing data, and for this 10-job example it produces the following output:

    $ calc-timing ../var/timings.old
    job /shared/lstc/neon finished in 1543 seconds, after interrupt (s).
    job /shared/lstc/neon-9 finished in 1308 seconds, uninterrupted.
    job /shared/lstc/neon-8 finished in 1333 seconds, uninterrupted.
    job /shared/lstc/neon-1 finished in 1478 seconds, after interrupt (s).
    job /shared/lstc/neon-3 finished in 1279 seconds, uninterrupted.
    job /shared/lstc/neon-2 finished in 1537 seconds, after interrupt (s).
    job /shared/lstc/neon-5 finished in 1313 seconds, uninterrupted.
    job /shared/lstc/neon-4 finished in 1295 seconds, uninterrupted.
    job /shared/lstc/neon-7 finished in 1334 seconds, uninterrupted.
    job /shared/lstc/neon-6 finished in 1304 seconds, uninterrupted.
    10 jobs finished and they finished in 13724 seconds in CPU time.
    Wall clock time: 14669 seconds elapsed.

The interruptions in the above example were triggered manually with the Amazon EC2 metadata mock tool and do not represent the actual performance and availability of Spot Instances.

Three of the ten jobs were interrupted. The execution times of the three jobs affected by interruptions increased by an average of 11%. In exchange for that 11% increase in execution time, costs drop by about 60% compared to On-Demand Instances, as shown in Figure 2. In the figure, the execution time is represented by blue bars and the total instance cost by red triangles.

Figure 2: Execution time and cost comparison using Amazon EC2 on-demand and spot instances for Ansys LS-DYNA jobs
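The aggregate numbers in the calc-timing output can be cross-checked with a few lines of awk. This is only a sketch that parses the text format shown in the example above; it is not how calc-timing itself works, and the function name `summarize_timings` is hypothetical.

```shell
#!/bin/bash
# Sketch: summarize calc-timing output read from standard input.

# Prints: <jobs> <interrupted> <sum of per-job seconds> <wall clock seconds>
summarize_timings() {
  awk '
    /^job / && / finished in / {
      jobs++
      total += $5                      # fifth field is the run time in seconds
      if ($0 ~ /after interrupt/) interrupted++
    }
    /^Wall clock time:/ { wall = $4 }  # fourth field is the elapsed seconds
    END { printf "%d %d %d %d\n", jobs, interrupted, total, wall }
  '
}

# Sample lines copied from the calc-timing output in this article.
sample='job /shared/lstc/neon finished in 1543 seconds, after interrupt (s).
job /shared/lstc/neon-9 finished in 1308 seconds, uninterrupted.
job /shared/lstc/neon-8 finished in 1333 seconds, uninterrupted.
job /shared/lstc/neon-1 finished in 1478 seconds, after interrupt (s).
job /shared/lstc/neon-3 finished in 1279 seconds, uninterrupted.
job /shared/lstc/neon-2 finished in 1537 seconds, after interrupt (s).
job /shared/lstc/neon-5 finished in 1313 seconds, uninterrupted.
job /shared/lstc/neon-4 finished in 1295 seconds, uninterrupted.
job /shared/lstc/neon-7 finished in 1334 seconds, uninterrupted.
job /shared/lstc/neon-6 finished in 1304 seconds, uninterrupted.
10 jobs finished and they finished in 13724 seconds in CPU time.
Wall clock time: 14669 seconds elapsed.'

read -r jobs interrupted total wall <<<"$(printf '%s\n' "$sample" | summarize_timings)"
echo "jobs=$jobs interrupted=$interrupted run_seconds=$total wall_seconds=$wall"
echo "time not spent running: $((wall - total)) seconds"
```

For the sample data this reports 10 jobs, 3 of them interrupted, 13724 seconds of summed run time against 14669 seconds of wall clock time, so 945 seconds were spent outside of actual execution.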

Cluster settings and AWS services

The configuration of the HPC cluster built on AWS for the tests in this article is shown in Figure 3 below. We used compute-optimized Amazon EC2 C5 Spot Instances. The Amazon EC2 c5.18xlarge instance has 36 Intel Skylake-SP CPU cores running at 3.4 GHz. An Amazon Elastic File System (Amazon EFS) drive was attached to the head node to store application files and share them with the compute nodes. Simulation checkpoint information collected from each compute node is also stored on the Amazon EFS drive.

The following is a block diagram.

Figure 3: Configuration for running LS-DYNA on AWS with ParallelCluster

AWS ParallelCluster is an open source cluster management tool supported by AWS that you can use to deploy and manage HPC clusters. AWS ParallelCluster with the SLURM job scheduler is a prerequisite for running the Ansys LS-DYNA checkpoint utility. Amazon Elastic File System (Amazon EFS) is an elastic file system that you can use for file sharing without having to manage your own storage. A shared Amazon EFS drive is well suited to storing application checkpoint data because it is seamlessly accessible from all compute nodes.

To further utilize Spot Instances

Beyond monitoring Spot Instance interruptions and resubmitting jobs with the Ansys LS-DYNA checkpoint utility as described above, there are further ways to make the simulation environment more fault tolerant.

One such method is to diversify the instance types, that is, to run an Ansys LS-DYNA simulation across multiple instance types. This can be achieved using AWS ParallelCluster's multi-queue and multi-instance capabilities. See the "Using multiple queues and instance types in AWS ParallelCluster 2.9" blog post for more information. By drawing on EC2 capacity across multiple instance types, an HPC cluster is less likely to be disrupted and gains flexibility in submitting jobs.

Conclusion

Engineering teams can now effectively run fault-tolerant Ansys LS-DYNA simulations on Amazon EC2 Spot Instances, with cost savings of up to 60% compared to On-Demand Instance prices.

In this article, we showed that Ansys LS-DYNA's new checkpoint utility lets you run FEA workloads that tolerate Spot Instance interruptions. The utility can also automatically resubmit jobs to the HPC cluster's queue when instance capacity returns.

If you're interested in running FEA and other HPC workloads with Amazon EC2 Spot Instances and Ansys LS-DYNA, more information can be found on the Ansys LS-DYNA solution page.

This article was translated by Solution Architect Ono from "Cost-optimization on Spot Instances using checkpoint for Ansys LS-DYNA", posted on September 27, 2021.